The Case for AI Optimism

Concerns about generative artificial intelligence (AI) are running rampant. Technology-industry leaders have warned that certain AI systems "pose profound risks to society and humanity." AI researcher Eliezer Yudkowsky expects the "most likely result" of continuing to advance AI is that "literally everyone on Earth will die." The Guardian published an article with the headline, "Five ways AI might destroy the world." Another headline from CNN seems reassuring in comparison: "Forget about the AI apocalypse. The real dangers are already here."

Against that backdrop, concerns about the economic effects of generative AI might seem mundane. Yet industry leaders, economists, and the general public are all deeply concerned about AI's potential to significantly disrupt our economy. Elon Musk recently forecast that there "will come a point when no job is needed" because "AI will be able to do everything." A 2017 poll of academic economists found that nearly four in 10 believed AI would substantially increase the number of workers who are unemployed for long periods of time; only around two in 10 disagreed. A recent Gallup poll found that three-quarters of Americans believe AI will decrease the number of jobs in the economy. Even the more measured concerns, such as worries about AI-driven disruption and its effects on workforce participation, deserve to be taken seriously.

The root of these concerns is legitimate, but their magnitude is wildly overblown. The entire thrust of the debate about AI reflects an astonishing and unwarranted pessimism.

Recent advances in AI should generate optimism for, not fear of, the future. Yes, it will be disruptive. But workers in the United States have undergone multiple waves of disruptive technological change throughout history, and emerged better off as a result. Knowing that America's experiences with technology-driven disruption proved a net benefit should give us confidence about our ability to come out ahead in the AI revolution.

A TURNING POINT

The turning point came in the final weeks of 2022 with the launch of OpenAI's chatbot, ChatGPT. Since then, AI has captured the imagination of students, teachers, CEOs, economists, politicians, and investors. Advances in natural-language processing existed before ChatGPT, of course: Users can speak to Amazon's Alexa as if it were a human being, and Gmail's autocomplete function can predict the words the user is about to type. But neither technology passed the Turing test, which Alan Turing proposed in 1950 as the ultimate test of machine intelligence: whether a machine's behavior can be distinguished from that of a person.

Seventy-three years later, machines passed the test. Today's generative AI systems can write a song or a poem that one would think was written by a human being. Told about someone's personal problems, generative AI can offer advice. These new tools can also outperform most human test-takers on standardized tests such as the SAT and the bar exam.

ChatGPT and other generative AI systems represent incredible advances in technology. But it is important to remember what generative AI is — and what it is not.

Generative AI systems are tools that can create new content, including text, images, computer code, and videos. AI is a general-purpose technology — a technology that will affect the entire economy on a global scale. The printing press, the steam engine, and electricity are canonical examples of general-purpose technologies. In recent decades, personal computers and the internet changed the way companies operate, workers work, and consumers shop, and enabled an explosion of complementary innovations. Generative AI will have a similar effect.

Fundamentally speaking, generative AI can produce outputs based on the information and patterns present in the data used to train it. It can look at thousands of medical images and determine, with great accuracy, which ones show that a patient has cancer. It can predict the next word in a sentence so well that you'd think the paragraphs it composes were written by a person. But because it learns by studying enormous numbers of examples rather than by following rules, AI is often wrong. And it is far from artificial general intelligence — it cannot match the full breadth of human intellect and imagination. Nor does it have human-level creativity or people skills.

As amazing as this technology is, its future is uncertain. We are still in the early stages of its development and diffusion. There is a consensus that generative AI is a major technological advance, but economists are divided over how quickly and widely it will be adopted and the extent to which it will boost productivity. At the time of this writing, only a small share of companies are using generative AI as a regular part of producing goods and services, and we have little evidence of AI in productivity statistics. But investment in AI-related hardware has surged since the beginning of 2023, foreshadowing future advances and disruption.

In short, the AI revolution has begun.

TECHNOLOGICAL ADVANCE AND EMPLOYMENT

At least since the 19th-century Luddite movement, there has been widespread concern that advances in technology will lead to substantial increases in the share of workers who are willing and able to work but cannot secure employment. This fear seems reasonable at first blush, but it rests on a zero-sum view of the economy that is empirically unsupported.

To be sure, as technology advances, new machines are able to complete some of the tasks that workers performed previously. But the amount of work to be done is never fixed.

Technological advances generally increase workers' productivity by allowing them to produce more goods and services for every hour they work. In this way, new technology increases the value of workers to firms, which in turn compete for workers more aggressively in labor markets. This competitive process bids up workers' wages, causing their incomes to rise. As a result, workers' own demand for goods and services increases. As aggregate demand rises, businesses need more workers to keep up. Technological unemployment, meaning widespread joblessness caused by technological advances, is therefore avoided.

What's more, technological advances lead to new goods and services coming to market, and tend to increase the quality and variety of consumer goods and services available. This further increases consumer demand and, in turn, employers' demand for workers.

These theoretical arguments are rooted in empirical fact. The Industrial Revolution did not eliminate the need for workers. But we do not need to go back to the 1800s to study this phenomenon: Contemporary examples will be more persuasive to many than events from two centuries ago.

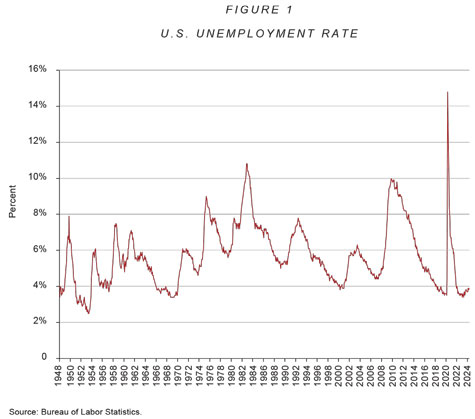

The past five decades of American history have witnessed breathtaking advances in technology, including the widespread adoption of the personal computer, the internet, and the smartphone, as well as advances in robotics in the manufacturing sector. Yet despite these advances, we do not see an upward trend in the unemployment rate over this period. These numbers indicate that it has not become systematically more difficult for workers to find jobs.

Of course, that doesn't mean the U.S. labor force escaped the impact of these changes. Indeed, the digital revolution has had a profound effect on American workers. We can see as much if we assign all occupations in the U.S. economy to one of three groups — low wage, middle wage, or high wage — and look at how employment has been spread across these groups over time.

Back in the 1970s, the three groups accounted for roughly equal numbers of jobs. But by the mid-2010s, only around one-quarter of all jobs were middle-wage occupations. This "hollowing out of the middle" has been one of the most important economic and social changes of the past half-century.

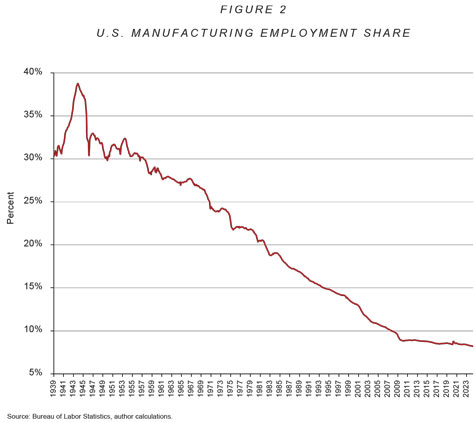

The manufacturing sector bore much of the impact. Between the end of the Second World War and 1980, manufacturing employment as a share of total employment fell from around one-third of all jobs to one in five. Today, that number is down to less than one in 10.

Though economic nationalists argue that this trend was caused by globalization, in reality the primary driver (by a large margin) was technological change: Advances in technology, which led to substantial gains in manufacturing productivity, displaced many middle-skill, middle-wage manufacturing workers.

These labor-market disruptions were not confined to manufacturing: New technologies also eliminated the need for many middle-wage administrative, office, clerical, construction, and manufacturing-production jobs. Instead of an administrative assistant, many white-collar workers now have Microsoft Outlook and voicemail. Instead of depositing a check by handing it to a cashier, people use ATMs or their smartphones, or make and receive payments electronically.

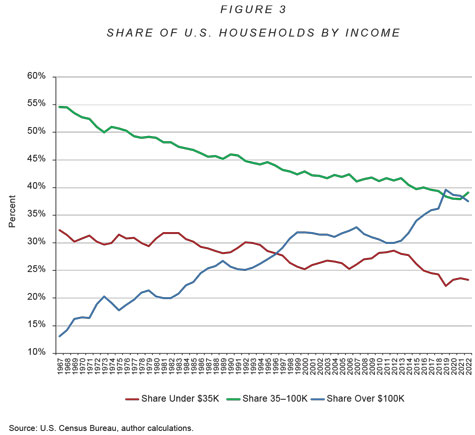

But as disruptive as this wave of technological change was, the past several decades have been a net positive for American households. In fact, the story of the last half-century is mostly a story of upward mobility.

To illustrate, the chart below shows that over the past five decades, the share of households earning mid-level incomes (defined here as between $35,000 and $100,000, adjusted for inflation) has indeed declined. But that decline largely reflects upward mobility: More households now earn six-figure incomes than did 50 years ago.

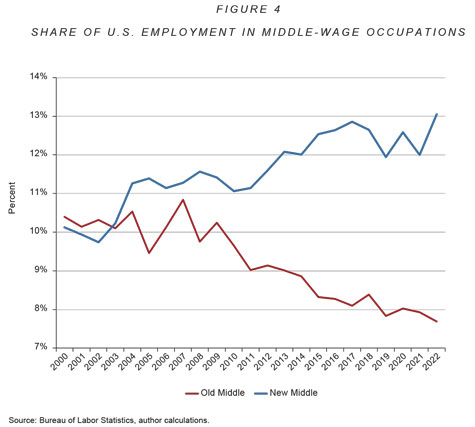

Meanwhile, although employment in middle-wage manufacturing and administrative jobs has fallen, a "new middle" is rising in its place. These growing middle-wage occupations include, among others, sales representatives, truck drivers, managers of personal-service workers, heating and air-conditioning mechanics, computer-support specialists, self-enrichment education teachers, event planners, health technicians, massage therapists, social workers, family counselors, paralegals, chefs, and food-service managers.

These jobs probably require a bit more education, more skills, and more experience than jobs in the old middle. They also involve more interpersonal interaction, so they call for workers with greater social intelligence and situational adaptability, as well as technical and administrative skills. But they do provide a pathway to the middle class.

Just as the typical individual is better off thanks to the technological changes of recent decades, so too is the typical place. In his inaugural address in 2017, then-president Donald Trump spoke of "American carnage," referencing "rusted-out factories scattered like tombstones across the landscape of our nation." The president was tapping into a powerful political constituency shaped in part by changes in employment across occupations. His words, however, exaggerated the conditions on the ground. Today, a solid majority of counties with a disproportionately large share of manufacturing jobs in 1970 have strong local economies. Six in 10 of those counties have successfully transitioned to new industries, and another 23% posted solid economic performance over the prior two decades while maintaining a large manufacturing sector.

To say that the digital revolution was a net positive is not to say that it was a positive for everyone. The disruption of recent decades created winners and losers among workers and communities. The people and communities made worse off receive the lion's share of attention from policymakers and the public, and rightly so. Policymakers did not do enough to extend economic opportunity to workers displaced by the digital revolution. Keeping the spotlight on those workers is a necessary step in making sure policymakers do not repeat the same mistakes with the AI revolution.

But it is important not to confuse the experience of those who have been left behind by technological change with the experience of typical workers and households, for whom the United States' recent experience with technology-driven disruption was a net benefit. The coming wave of AI-driven disruption will likely have a similar effect.

GENERATIVE AI AND THE LABOR MARKET

Generative AI will undoubtedly disrupt our economy. Since we do not know how the technology itself will evolve and develop, how quickly and to what extent businesses will use it, and what social and political factors will affect how it spreads throughout the economy, the precise nature of that disruption is difficult to predict. However, we can attempt to describe the way generative AI will affect employment at a high level of abstraction.

To start, consider a stylized example from the digital revolution of the recent past. Imagine a bank four decades ago, and assume for the sake of exposition that the bank has three employees: a custodian, a cashier, and a CEO. Technology advanced. What happened?

The cashier was the most directly affected. ATMs could accept deposits and dispense cash more accurately and cost-effectively than a human cashier. Many of the tasks that cashiers performed before the digital revolution were automated and carried out by these ATMs. More recently, smartphones have taken over many of those functions as well.

The CEO was also affected, but positively. The CEO gained access first to software (e.g., Microsoft Office), and later to the internet and smartphones, that could be used to make better, more informed decisions. This allowed the CEO to do the job more effectively than would have been possible before the digital revolution.

As for the custodian, new robotic technologies developed in recent decades can perform certain tasks on an assembly line, but they cannot go from office to office in a bank and empty trashcans or vacuum carpets. So the custodian's job remained much the same despite new technology.

As this example shows, the technology of the digital revolution excelled at completing tasks that could be described explicitly, in a series of steps. Software and robots can do precisely the same thing — deposit a check, screw on a hubcap — over and over again, in exactly the same way each time, with perfect accuracy, and at much less expense to businesses than a human worker. Consequently, people who held jobs that involved executing the same series of steps over and over again were displaced. At the same time, jobs that involved creativity, problem solving, and interpersonal skills saw wage increases because the new technology made workers in those jobs more productive, and therefore more valuable to businesses. Jobs that involved situational judgment and fine motor skills, like that of the custodian, were relatively unaffected.

The types of jobs that required executing the same series of steps with strict precision before the digital revolution tended to be those that called for a middling level of education and paid middle-class wages. Despite their vulnerability to automation, they were not low-wage jobs, precisely because they required the accurate execution of procedures, along with the education and experience needed to execute those procedures repeatedly and meticulously. Lower-wage jobs, whose tasks did not require the same degree of precision, were relatively less affected. Finally, high-wage workers were able to use computing advances to increase their productivity, and therefore their wages. Hence the "hollowing out of the middle," and with it the discontent Trump tapped into in his first inaugural address.

With that in mind, what can we expect from the AI revolution?

The difference between the jobs affected by the digital revolution and the AI revolution will be driven by the differences between the two technologies. In 1966, philosopher Michael Polanyi observed that "we know more than we can tell." He was referring to tacit knowledge: our ability to ride a bicycle, tell a captivating story, make a persuasive argument, recognize faces, and interpret body language. Human beings do these things every day, yet we do not understand explicitly how we do them. Economist David Autor refers to the dawn of generative AI as "Polanyi's revenge": "computers now know more than they can tell us."

In recent decades, computers and robots have been able to masterfully execute a defined series of steps but have struggled with tasks that programmers find hard to describe as such steps. It is straightforward to write down the list of steps a cashier needs to go through in order to deposit a check; it is extremely difficult to write down a list of steps that would allow a computer (or a human being) to accurately recognize an adult's face in a childhood picture, or to make a persuasive argument. Yet humans perform these tasks regularly. Advances in AI mean that computers can now perform not only tasks that humans carry out through explicit processes, but also those that humans carry out through tacit ones.

A breakthrough occurred when scientists moved away from efforts to write lists of explicit steps and instead pursued an inductive approach: training AI systems using examples. Though this requires an enormous number of examples and substantial computing power, this statistical, predictive approach of imbuing AI systems with tacit knowledge has proven far more successful than trying to reduce facial recognition, persuasive argument, and many other processes to explicit rules.

Because generative AI systems can execute tasks without having to follow a series of specific steps — in short, because they can acquire tacit knowledge — they are able to do much more than computers have been able to do in the past. AI models can generate graphic art, write computer code, and compose musical lyrics. Large language models can write essays that a reasonable reader would think were written by a person.

From these distinctions, what can we say more specifically about the nature of AI-driven labor-market disruption?

The best answer is "very little." At the time of this writing, the application that launched the current AI revolution, ChatGPT, is 19 months old. We are still in the early stages of generative AI development, and we do not know how the technology will evolve. Economists have not yet produced a body of evidence that can guide forecasts about the economic effects of AI. We do not have a good sense of how quickly and to what extent businesses will use the technology, and we do not understand the social and political factors that will shape its diffusion. Above all, we do not know what new goods and services will be created using AI technology, or what new occupations humans will fill to produce them.

Economist Erik Brynjolfsson and computer scientist Tom Mitchell have articulated a compelling list of the types of tasks to which AI tools are well suited. The first, as mentioned above, is mapping well-defined inputs to well-defined outputs. This capability is what enables AI systems to map an adult's face to a childhood photo. Another important application is evaluating diagnostic imagery — reading CT or PET scans to diagnose cancer and other ailments. Brynjolfsson and Mitchell argue that AI tools work particularly well when the goal of a task is clear, even if the best process to achieve the goal is not clear — optimizing traffic flow throughout an entire city, for instance. Because AI systems make errors, the tasks most suited for AI tools are those in which an error is not a catastrophe.

Brynjolfsson, Mitchell, and economist Daniel Rock applied this rubric to evaluate the potential for applying AI tools to over 18,000 tasks, 2,000 work activities, and 900 occupations. They concluded that most occupations in most industries involve at least some tasks that AI systems can perform, but few if any can be done entirely via AI.

Additional evidence for forecasting AI's effect on the labor market comes from the astonishing success of AI systems in image recognition and in passing exams for professional licensing. It is reasonable to speculate that AI systems will be able to perform many tasks (diagnosing medical conditions, writing documents, synthesizing research, producing presentations, and more) at much less expense to businesses than human employees. These intellectual services are currently performed by workers who are relatively well educated and earn higher incomes, in contrast to the middle-skill, middle-wage occupations that were most heavily disrupted by the digital revolution. For now, then, the best guess is that these higher-wage workers will be the most exposed to the AI revolution's creative destruction.

NEW WORK

Even if we had a clear sense of the potential reach of AI, we would need to be careful about projecting the character of its effects.

Here, too, the examples of recent technological changes are instructive. As noted above, the digital revolution meant that ATMs could execute the core functions of a 1970s-era bank teller — taking deposits, dispensing cash, etc. — with more precision and at less expense than a human employee. As a result, banks shifted those functions from tellers to machines. But as the technology scholar James Bessen has demonstrated, this did not lead to a large reduction in the number of bank tellers. In 1990, there were around 100,000 ATMs and 500,000 bank tellers in the United States. In 2010, there were about 400,000 ATMs and just under 600,000 bank tellers.

ATMs reduced the cost of operating a bank branch in large part by nearly halving the number of tellers required to work in each branch. So why did the number of bank tellers rise rather than fall? It turns out that banks used the money they saved by installing ATMs to open additional branches — each of which required tellers.

What's more, ATMs changed what bank tellers do. Tellers became relationship managers, handling customers whose needs were more complex than those with checks to deposit or withdrawals to request. The interpersonal skills required to make customers feel welcome when they enter branches and to handle difficult or complex situations became more important than the ability to accurately and repeatedly execute deposit and withdrawal procedures.

Something similar is likely to happen with AI over the next few decades. To illustrate, we can think of jobs as bundles of tasks. Generative AI systems will perform some of those tasks at less expense and with greater efficacy than human workers, and will augment workers' ability to carry out others, thereby increasing productivity. Consider three examples of how this might work, offered with considerable uncertainty given that we are at the early stages of the AI revolution.

First, let's take the case of lawyers and paralegals. These individuals will need to spend much less time than they do now writing briefs and classifying documents, two tasks that large language models will be able to perform. This will give them more time to spend interviewing witnesses and developing legal strategy. AI tools will help lawyers complete these tasks by proposing potential questions to ask witnesses and lines of argument to support a broader strategy. But AI will not be able to effectively interview witnesses or set the strategy itself. Some law firms experimenting with AI tools today are finding that the tools allow junior associates to advance faster because they perform basic legal research so efficiently, thereby jump-starting those associates' careers.

Second, consider the case of physicians. Because AI systems will be able to read and interpret scans and test results more effectively and inexpensively than humans can, physicians will need to spend much less time performing those tasks. AI tools will also be able to record and update patient information in medical charts and records by listening in when physicians are examining patients. This will allow physicians to spend more time communicating with patients, thereby increasing the quality and effectiveness of those conversations. For patients with advanced illnesses, physicians will also have more time to coordinate with one another to manage care comprehensively.

Retail-store managers, a third example, will need to spend less time managing employees' schedules and inventory cycles; AI tools will be able to complete those tasks for them. This will give managers more time to oversee and coach workers, solve problems, and create a positive shopping experience for customers. AI will also assist managers by making suggestions to optimize the shopping experience in the store and proposing potential management strategies based on an employee's career history and other factors.

Creative destruction creates as well as destroys. The AI revolution will create many opportunities that we cannot conceive of today. Standing in the year 2024 and trying to predict the jobs of the future is no easier than standing in the year 1944 and trying to predict that the labor market of the future would contain systems analysts, circuit-layout designers, fiber scientists, and social-media managers. In fact, about 60% of workers in 2018 were employed in occupations that did not yet exist in 1940. New occupations emerge in large part because technology advances, creating new goods and services that in turn require human workers to engage in new occupational tasks. Technological advances also make society wealthier, increasing the demand for goods and services (especially new ones), which in turn raises the demand for workers' skills, talents, and efforts.

To illustrate, imagine trying to explain to the 19th-century classical economist David Ricardo the jobs of all the people who support Bruce Springsteen's records and tours: sound engineers, digital editors, graphic designers, photographers, videographers, art directors, instrument technicians, social-media directors, marketing professionals, bookers, stage hands, sound directors, lighting engineers, body men, commercial-vehicle drivers, and, of course, the jobs of the members of the mighty E Street Band and Mr. Springsteen himself. These occupations and the tasks workers perform in them did not exist in Ricardo's time because the technology that enables them had not been invented. They also did not exist because the wealth created by today's technology had not been generated: Society in Ricardo's day could not have afforded rock bands.

Although we cannot make specific predictions of the economic effects of AI with any accuracy, evidence from the past should allow us to make two general predictions with a high degree of confidence: that AI technology will be disruptive, creating winners and losers in the labor market in part by creating entirely new occupations; and that the net effect of AI advances will be to increase workers' productivity, wages, and incomes, which will benefit them overall.

DON'T SLOW THE CLOCK

Generative AI will continue to advance, and the world of 2034 will be different from the world of 2024 because of it. While we should be confident that AI will not cause substantial technological unemployment and will generate a net benefit for typical workers and households, we cannot be as sanguine about its disruptive effects.

Economists are often too quick to dismiss disruption. Many economic historians believe that the living standards of the British working class did not improve for decades following the start of the Industrial Revolution. Entire occupations were wiped out, and wages fell dramatically for some of the jobs that survived. People flocked from the countryside into dirty, unsanitary cities to take factory jobs characterized by debasing discipline and hazardous working conditions.

These events shook British society to its core. Karl Marx and Friedrich Engels famously argued that "constantly revolutionising the instruments of production" had caused a revolution in the "whole relations of society." "All that is solid," they declared, "melts into air." Charles Dickens began publishing David Copperfield the year after Marx and Engels released their Communist Manifesto. The London of this era — with its filth, poverty, and poor working conditions — was itself a Dickensian character. Marx walked the streets of Dickens's London, directly observing how technology was upending society.

The digital revolution was disruptive in the ways discussed above, but it was less disruptive than the Industrial Revolution. Part of the reason was that advanced democracies had policies in place during the 20th century that provided a safety net for the workers and households who needed it. Even so, American policymakers did not do enough to extend opportunity to all workers dislocated by the new technology. We should do better with the generative-AI revolution.

The policy debate over generative AI is still being conducted at a high level of generality because we are in the early stages of the technology's development. But any policy changes should be guided by three broad principles.

The first principle is to recognize that technological advances are beneficial to society, and that we should not try to slow their pace. The world would be much worse off if policymakers had tried to hold back or shape the new technologies of the industrial and digital revolutions; we owe it to our future selves and future generations not to do so with generative AI. We should instead take most technological change as exogenous, a given that will develop according to the science and the imaginations of innovators, and design policy around organic technological advances. Policymakers should try to soften the short-term disruption from technological change, but not at the expense of denying workers and households the vast long-term benefits that technological advances will bring.

The second principle is that participation in economic life should be encouraged. Work is a positive good. For many people, contributing to humanity through market activity is key to building a full and flourishing life. Work brings people together through shared goals and experiences, and binds them to their communities. It builds a prosperous society characterized by mutual contribution, dependence, and obligation.

Policymakers designing ways to assist displaced workers should be careful not to disincentivize work. Calls for a universal basic income adequate to live on are understandable given fears of mass AI-induced unemployment, but they should be rejected on the grounds that they would discourage participation in economic life. The "welfare for all" model of a large middle-class entitlement state should also be rejected on similar grounds.

Third, the United States should maintain a strong safety net for those who truly need it. Rapid technological change overhauls enormous sectors of the economy, displacing workers who no longer have the education, experience, or skills needed to pursue opportunities in remaining or emerging industries. As noted above, the welfare policies set in place during the 20th century helped moderate the disruptive impact of the digital revolution. Policymakers should keep that example in mind when confronting the impact of generative AI.

These three principles can help guide us through the early stages of the policy debate, separating the wheat from the chaff. Policies to pause AI development should immediately be rejected as unrealistic. Those designed to "level the playing field" between workers and machines by increasing the tax rate on capital should be dismissed as efforts to slow down AI adoption, as should calls to increase the bargaining power of workers for the same purpose. Calls for greater use of antitrust measures to combat the perceived political influence of companies developing new technologies represent not only an abuse of antitrust authority, but a threat to long-term prosperity; they, too, should be turned down.

So much for the chaff. But what about the wheat?

To start, Congress should substantially increase funding for basic research and leave decisions about what to study and which innovations to pursue to the researchers themselves. Lawmakers should also ensure that the full cost of investment in research and development is tax deductible in the year the investment is made, as was the case for decades prior to the 2017 tax law. Restoring that treatment of these investments would boost both wages and economic output.

Governments at all levels should also support entrepreneurship to help ensure that businesses use new AI-based technologies to increase worker productivity and create new goods and services for consumers. Reforming occupational-licensing restrictions would remove a barrier to entry for many well-paying jobs, enabling new businesses to form. Policies to help small businesses obtain startup financing could also encourage business formation. Since immigrants are more likely than native-born workers to become leaders in science and technology and to start new businesses, increasing the number of highly skilled immigrants allowed to work in the United States would help businesses adopt new technologies. At a minimum, foreign-born science and technology graduates of American universities should be offered green cards.

Beyond encouraging entrepreneurial activity, policymakers should ensure that Americans are prepared to compete in an economy shaped in part by advances in generative AI. This will require reforms to K-12 education. State and local policymakers should lengthen the school year and help all of their young residents graduate from high school. Curricula should focus on staples like math and reading, as well as on fostering creativity, interpersonal skills, and other abilities at which machines do not excel.

Policymakers should also adopt better training programs for adult workers who are displaced from their jobs by technological change. Traditional government-run job-training programs have had disappointing results, but newer work-based training models show promise. Apprenticeships, for example, allow workers to combine classroom learning and credentialing with on-the-job training. Since employers wouldn't hire an apprentice unless that apprentice fulfilled a business need, workers in these programs would be making real contributions to their local economies. And because the market is shaping the curriculum, those workers would learn skills that local businesses find valuable. To encourage employers to partake in such programs, policymakers should grant them exemptions from certain labor-market regulations that could pose barriers to their participation.

Additionally, evidence shows that some sector-focused employment programs generate large and persistent earnings gains for workers — especially when they focus on workforce mobility and transferable skills that can be credentialed. Like K-12 education, these training programs should focus on skills that complement rather than compete with technology.

Better use of the nation's community colleges, meanwhile, can retrain workers and help young people build the skills necessary for well-paying jobs in a changing economy. Additional resources for community colleges should be made available based on institutional outcomes, including completion rates and the share of students enrolled in programs teaching marketable skills. Community colleges that have access to more resources and are more focused on business needs could expand opportunities for mid-career training, leveraging relationships between community colleges and local employers. They could also offer more opportunities to non-college-educated workers.

In the near term, the AI revolution could reduce the wages that some workers can command in the labor market. To make work pay, policymakers should expand the earned-income tax credit (EITC), the refundable tax credit offered to low-income and working-class individuals and families. Because the credit is available only to individuals who are employed, previous EITC expansions have increased participation in the workforce, putting many recipients on the path to higher wages and self-sufficiency. The credit also lifts millions of Americans, including several million children, above the poverty line each year. To reinforce the value of work, policymakers may need to consider extending the credit to higher-earning households if the scope and scale of short-term disruption warrant it.

At the same time, safety-net programs like disability insurance need to be reformed so that people who can work, even in a limited capacity, are able to do so. Policymakers need to change how people access health care to allow greater mobility between jobs — the R Street Institute's C. Jarrett Dieterle has outlined what that might look like in these pages. They should also reconsider regulations that pose a threat to business formation (which, in turn, pose a barrier to employment) and reform or repeal high-minimum-wage laws that weaken workforce participation among low-wage workers, particularly as the relative cost of capital investment falls.

Finally, AI-related concerns about plagiarism, privacy, and the ownership of intellectual property are legitimate, and policymakers need to address them. But they can do so in a way that does not become a major roadblock to developing and diffusing generative AI tools. These concerns should not be used as an excuse to slow or pause AI development.

Guided by these principles, policymakers can moderate the AI revolution's short-term disruptions while preserving its long-term benefits, all while ensuring that as many people as possible can enjoy the dignity that comes from earned success.

A BETTER WORLD

The year 2023 will be remembered as a turning point in history. The previous year, humans and machines could not converse using natural language. But in 2023, they could.

Many greeted this news with wonder and optimism; others responded with cynicism and fear. The latter argue that AI poses a profound risk to society, and even the future of humanity. The public is hearing these concerns: A YouGov poll from November 2023 found that 43% of Americans were very or somewhat concerned about "the possibility that AI will cause the end of the human race on Earth."

This view ignores the astonishing advances in human welfare that technological progress has delivered. Over the past 12 decades, for instance, child mortality has plummeted, thanks in large part to advances in drugs, therapies, and medical treatment, combined with economic and productivity gains. Generative AI is already being used to develop new drugs to treat various health conditions. Other advances in the technology will help mitigate the threat of a future pandemic. AI is also helping scientists better understand volcanic activity (the source of most previous mass-extinction events) and detect and eliminate the threat of an asteroid hitting Earth. AI appears more likely to save humanity than to wipe it out.

Like all technological revolutions, the AI revolution will be disruptive. But it will ultimately lead to a better world.