The New American System

Thirty years ago, during the 1984 Super Bowl, Apple presented what has become widely cited as the greatest television commercial in history. The ad was titled "1984" and began with gray imagery of crowds marching in unison to stare at a huge screen where a Big Brother figure gives a speech. A woman in bright colors runs through the crowd, chased by riot police, and smashes the screen with a huge hammer. Only then does the Apple logo appear with the announcement of the original Mac.

I was watching the Super Bowl that year on a primitive large-screen TV with dozens of other students in the dining hall of my dorm at the Massachusetts Institute of Technology. The group was transfixed, and then erupted in cheers when we realized this was an ad for a computer. It was as if, in the midst of all the beer and car ads, somebody was speaking directly to us.

Students one generation earlier would likely have seen this as symbolizing a revolt against an oppressive and almost irresistible "system." For us at MIT in those years, it was different. Like most young people, we found many existing arrangements in need of change. But many of the major institutions in American life had begun to seem not so much fearsome as decayed and ineffective.

A number of people in that dining hall went on to help create what came to be called the new economy. It is hard to overstate the degree to which many of us at the time saw ourselves as trying to survive in the wreckage of an American economy that seemed to have lost its ability to innovate.

In fact, American innovation has always proceeded in cycles in which pessimism and near panic act as the emotional impetus for creative advances that yield a new economic order that thrives for a time and then loses its edge. That cycle has been essential to America's unprecedented economic achievement over the past two-and-a-half centuries, and it is playing out again today.

There is much talk in Washington now about the need for a new era of American innovation to help us break out of an economic rut. But there is far less understanding of the ways in which these cycles of innovation and stagnation have worked, and so of what might be required to revive our economic prospects. By seeing how we've managed, time and again, to remake America into an engine of innovation and prosperity, we can better understand the nature of the challenge we now face, the character of the opportunities we may have for addressing it, and the kinds of responses that are most likely to work.

THE AMERICAN SYSTEM

Gross domestic product per capita is the fundamental measure of a society's material standard of living over time. By this measure, the American colonies started poor but began getting richer very quickly. By 1820, the United States had achieved a level of GDP per capita roughly equal to that of Western Europe. By 1900, it had achieved a decisive global advantage compared to all potential strategic rivals. It has maintained a great advantage on that front ever since. Though Europeans certainly live very well, and though both China and India are rapidly improving their citizens' standards of living, the United States remains indisputably ahead.

How has America achieved and sustained these great gains in living standards? By definition, GDP per capita is equal to GDP per work-hour multiplied by the number of hours worked per capita. Because there are only 24 hours in a day, the only way to increase living standards indefinitely is by increasing the GDP per work-hour part of the equation, that is, by increasing labor productivity.
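The identity in the preceding paragraph can be written out explicitly. This is only a sketch: the symbols Y (GDP), H (total hours worked), and N (population) are introduced here for illustration and do not appear in the original text.

```latex
\[
\underbrace{\frac{Y}{N}}_{\text{GDP per capita}}
= \underbrace{\frac{Y}{H}}_{\text{GDP per work-hour}}
\times \underbrace{\frac{H}{N}}_{\text{hours worked per capita}}
\]

% In growth-rate terms (an approximation that holds for small rates):
\[
g_{Y/N} \approx g_{Y/H} + g_{H/N}
\]
```

Because H/N is bounded above by the number of hours in a day, its growth rate cannot stay positive forever, so sustained growth in Y/N has to come from growth in Y/H, which is labor productivity.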

There are really only two ways to increase labor productivity. The first is to increase our use of other inputs like land or equipment. The second is to invent and implement new ideas for getting more output from a given set of inputs — that is, to innovate. In 1957, economist Robert Solow of MIT published the first modern attempt to measure the relative contributions of additional inputs versus innovation to increasing labor productivity. He looked in particular at the United States between 1909 and 1949 and estimated that only about 12.5% of all growth in output per work-hour over that period could be accounted for by increased use of capital; the remaining 87.5% was "attributable to technical change," or innovation.
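The split between capital and innovation described above can be illustrated with a simple growth-accounting calculation. The sketch below is not Solow's actual procedure or data: the function name, the capital share of 0.25, and the growth rates are hypothetical values chosen only so that the arithmetic reproduces the 12.5% and 87.5% split quoted in the text.

```python
def decompose_productivity_growth(output_per_hour_growth,
                                  capital_per_hour_growth,
                                  capital_share):
    """Split growth in output per work-hour into two parts.

    capital contribution = capital share x growth in capital per work-hour
    residual             = whatever growth is left over, read here as
                           "technical change," or innovation
    All growth rates are in percent per year.
    """
    capital_contribution = capital_share * capital_per_hour_growth
    residual = output_per_hour_growth - capital_contribution
    return {
        "capital_fraction": capital_contribution / output_per_hour_growth,
        "innovation_fraction": residual / output_per_hour_growth,
    }


# Hypothetical inputs (NOT Solow's data): output per work-hour grows 2.0%
# a year, capital per work-hour grows 1.0% a year, capital's share is 0.25.
result = decompose_productivity_growth(2.0, 1.0, 0.25)
print(result)
```

With these illustrative inputs, one-eighth of productivity growth is attributed to additional capital and seven-eighths to the residual. The residual is measured only as what is left over, which is one reason the interpretation of such estimates has been debated.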

Intense debate has followed in the long wake of Solow's conclusions, for which, along with related work, he was awarded a Nobel Prize. The participants in that debate have often offered arguments in which deep ideological beliefs have been passed off as technical assumptions, but in spite of these disagreements, there is widespread scholarly consensus that (as common sense would indicate) innovation, broadly defined, has been central to increasing American living standards. In summary, the root of American economic success hasn't been luck, or land, or conquest; it has been innovation.

The nation's approach to achieving innovation has varied with the times, but it has generally demonstrated an almost ruthless pragmatism in implementing the core principles of free markets and strong property rights, overlaid with decisive government investments in infrastructure, human capital, and new technologies.

Free markets and aggressive public investments in infrastructure exist, of course, in some tension. Generally speaking, the underlying system of economic organization in the United States has been not only a free-market system but one that is among the freest in the world. Independent economic agents own private property and engage in only loosely regulated contracting. Highly distributed trial-and-error learning motivated by enlightened self-interest has always been the key driver of innovation. The government's role is mostly that of an armed referee rather than a participant in the economy. In this sense, America's underlying innovation policy has been "no policy."

But significant government overlays have always existed to reinforce our free economy. Indeed, the federal government has been active in shaping specific kinds of innovation since the first months of the republic, when Alexander Hamilton published his epochal 1791 Report on Manufactures. The Report proposed subsidies and protections for developing manufacturing industries — the high-tech sector of its day — to be paid for by tariffs.

The debate about these recommendations was strikingly modern. Hamilton shared in the reigning American consensus in favor of free markets, but advocated for an exception in the case of manufacturing. His case was rooted in sophisticated externality arguments. Manufacturing, he argued, would allow for a far more efficient division of labor and better matching of talents and capacities to occupations, would create an additional market for agricultural products, and would encourage immigration to further extend each of these benefits. All of this would be immensely useful to the new nation, and it was only sensible for the government to actively encourage it. The opponents of Hamilton's plan emphasized the public-choice problems with subsidizing specific sectors and businesses, especially the potential for corruption and sectional favoritism. Broadly speaking, the tariffs were implemented, but not the subsidies. In fact, the tariffs quickly became much higher than those Hamilton had proposed, as political constituencies grew up around them.

Despite his suggestions being only partially implemented, Hamilton's basic insight — that the enormous economic value that innovative industries could offer the nation merited public efforts to enable their success — has always had strong adherents in national politics. In the decades leading up to the Civil War, for instance, the federal government intervened strategically in markets to spur innovation, immediately and frequently exercised its constitutionally enumerated power to grant patents, and even encouraged and protected Americans who stole industrial secrets from Britain — at the time the world leader in manufacturing technology.

Much of the motivation for such policies was grounded in military priorities. The West Point military academy was founded in 1802 in large part to develop a domestic engineering capability, and armory expenditures stimulated the growth of an indigenous manufacturing capability that by 1850 had in some sectors become the most advanced in the world. Other significant investments in infrastructure included financing, rights of way, and other support to build first canals and then railroads, which were essential to driving productivity improvements. The internet of the era was the telegraph, and it too benefited from public support early on. In 1843, Congress allocated the money to build a revolutionary telegraph line from Washington, D.C., to Baltimore that pioneered many of the important innovations — such as suspended wires — that would come to be used to build out the national telegraph network and later the telephone network.

Henry Clay called this program of tariffs, physical infrastructure, and national banking "The American System." Its goal was to transform the United States from a group of sectionally divided agricultural states tightly linked to the British manufacturing colossus into a unified, dynamic industrial economy. Abraham Lincoln, who identified himself as "an Old-line Henry Clay Whig," accelerated this process dramatically, both because of the exigencies of war and because the Southern-based opposition to the program was no longer in Congress during the Civil War years. The federal government moved aggressively. It expanded the infrastructure of railways and telegraphs, increased tariffs, established a system of national banks, founded the National Academy of Sciences, and established the Department of Agriculture and a system of land-grant colleges that ultimately created agricultural experiment stations to promote innovation on farms.

Federal investments in biology and health innovation began to accelerate rapidly in the late 19th century. A set of Navy hospitals with origins in the 1790s was organized into the Marine Hospital Service in 1870, and Congress allocated funds for the study of epidemics, with particularly significant innovation occurring in the study of malaria. This organization ultimately became the 20th-century Public Health Service and spawned the Centers for Disease Control and Prevention. Congress also established the Laboratory of Hygiene in 1887, which eventually became the National Institutes of Health.

A direct line ran from Hamilton to Clay to Lincoln, and this approach — a free-market base overlaid with specific interventions to provide infrastructure and to promote incremental, innovation-led growth — was the pattern for roughly the century that followed Lincoln's assassination. But as a political matter, it became increasingly populist over that time. Hamilton was often attacked as a pretentious would-be aristocrat. Though Clay lived as a gentleman, he made hay of his humble origins and is often credited with inventing the term "self-made man." Lincoln was born in a log cabin and had a public image that was the opposite of aristocratic. The political energy behind this program remained nationalistic, but became increasingly focused on upward mobility, social striving, and maintaining the long-term legitimacy of the economic regime with the promise of opportunity for all.

This trend continued into the last century. Consequently, from roughly 1870 to 1970, the goals of conquering disease, educating the unschooled, and winning wars provided the strongest impetus for government investments in innovation. This remains central to the American ethos, and in certain respects the Progressive Era and the New Deal can be seen as extensions of this program, in which egalitarian ideas played an ever-increasing role.

This approach interacted with the rise of the mass institutions of mid-20th-century American life to create an innovation system that, in retrospect, was unusually centrally directed by American standards. World War II, of course, saw a level of defense-led government investment in technology that was unprecedented. At the conclusion of the war, Vannevar Bush — who was dean of engineering at MIT, founder of the defense contractor Raytheon, and founding director of the federal Office of Scientific Research and Development through which almost all military R&D was carried out during the war — authored the July 1945 report "Science: The Endless Frontier." The document laid the groundwork for the Cold War-era system of government sponsorship of science and engineering.

What is most immediately striking about this report to a contemporary reader is that Bush found it essential to define and defend federal investments in basic research. Previously, the United States had relied predominantly on exploiting fundamental scientific discoveries made in Europe, but in the post-war world, the nation would have to forge ahead on its own. In the same era, the G.I. Bill at the federal level, combined with increased spending at the state level, democratized access to higher education. Just as much of the rest of the world was catching up to America in secondary education, the United States began to pursue mass higher education. This created the pool of people who would become known as the "knowledge workers" of the new economy.

Another striking thing about the Bush report was its emphasis on large institutions — both public and private. He lived in and described a world of mammoth organizations, centralized coordination, and scale economics. These were the institutions that had won the war and would win the peace. America in the wake of the Depression and the war exhibited a faith in such large institutions that was unmatched in our country before and has not been seen since.

The big-institution approach that Bush described and then helped to orchestrate was an incredibly successful program for innovation that created the conditions for the growth of the information economy, as well as much of the aerospace and biomedical industries and related fields. But it also planted the seeds of its own obsolescence (or at least a drastically reduced relevance). Ironically, the new economy that this post-war system helped to create has little room for the post-war system itself. Innovation in the new economy looks quite different, a fact that America is still struggling to understand.

WHAT I SAW AT THE REVOLUTION

I stumbled into a career at the heart of technology innovation just as this transition was occurring, when I joined the data-networks division of AT&T's Bell Labs in 1985. Data networks were basically combinations of devices called modems that allowed computers to send and receive data over regular phone lines. It was one little corner of the information revolution, but its transformation illustrates some very broad trends, and ultimately led me to participate in the invention of what has become known as "cloud computing."

In the 1980s, we were building from a technology and business base that had roots that were at best semi-capitalist. For decades, the Department of Defense was the primary customer for innovative information technology. The technology itself was mostly developed by government labs, universities, defense contractors, and AT&T's Bell Labs. From World War II through about 1975, this public-private complex was at the frontier of innovation, producing (among many other things) the fundamental components of the software industry, as well as the hardware on which it depended. Government agencies collaborated with university scientists to develop the electronic computer and the internet. The Labs invented the transistor, the C programming language, and the UNIX operating system.

One of the many important spinoffs of the seminal 1950s SAGE air-defense system was the mass-produced modem, developed by Bell Labs. The Labs set the standard for modems and basically owned the market for decades. But in 1981, an entrepreneur in Atlanta named Dennis Hayes (the son of a Bell telephone-cable repairman and a telephone operator) created an improved modem. His key innovation was a patented signaling algorithm that allowed computers to connect without manual intervention. Combined with the advent of personal computers and the hobbyist computing culture, this innovation enabled the creation of electronic bulletin boards and, eventually, consumer dial-up access to the internet.

Within a few years, Hayes began to push data-transmission speeds faster than had been thought feasible. By 1985, modem speeds were eight times faster than in 1981, and for the first time an independent entity had created the new modem speed standard before the Bell system. Hayes had become a significant competitor, and AT&T was lethargic and in denial.

I had just joined AT&T, and can vividly recall analyzing our position and pointing out that Hayes could deliver a product that was both much cheaper and technically superior on every measured attribute. Our expensive, unionized manufacturing facilities couldn't keep up with a handful of people in what was basically a garage. Our vaunted technical staff couldn't get higher-speed designs out the door as fast. Our market research, planning, analysis, and other functions were basically just expensive overhead doing abstract research about people they didn't understand, while our smaller competitors lived in the hobbyist world and understood users' needs directly from experience.

These were symptoms of a larger transition that was not unique to the data-networks industry but was occurring throughout the entire technology sector. The PC revolution was transforming all of computing in a consciously democratized and decentralized way. This began the ascendance of Silicon Valley over all other technology centers, with its more open, freewheeling start-up culture. Financial innovators both invented so-called "rocket-science finance" and began the process of using debt and equity markets to break up and transform huge American manufacturing companies. All were extremely profitable activities.

Strategy consulting was a new industry, mostly created by people with science and engineering degrees who wanted to stimulate and directly profit from rapid change in large organizations to an extent that was typically infeasible for employees of the companies themselves. The industry began to grow explosively. Increasingly, the best science and engineering graduates were drawn to start-ups, finance, and strategy consulting. The center of gravity of innovation moved decisively from the behemoths of the post-war era to newer, more nimble competitors.

A Defense Science Board report published in January 1987 quantified the resulting transformation of the industry, noting that commercial electronics such as computers, radios, and displays were one to three times more advanced, two to ten times cheaper, five times faster to acquire, and altogether more reliable than their equivalents from the Department of Defense. Extrapolating from the report, professor Steven Vogel correctly anticipated that "commercial-to-military 'spin-ons' are likely to boom while military-to-commercial 'spin-offs' decline." In a complementary report published a month later, the Board acknowledged that "the Department of Defense is a relatively insignificant factor to the semiconductor industry" that it had originally midwifed.

Why did Dennis Hayes choose not to go to work for Bell like his parents when he saw a way to vastly improve modems? And why did so many others make similar decisions? There were several, mutually reinforcing causes.

First, the cultural revolution of the 1960s and '70s elevated independence and iconoclasm at the expense of the organization man. That ethic was essential to the early culture of the information-technology boom, and has in many respects remained quite important.

Second, information technology radically lowered many kinds of economic transaction costs, so efficient firm size became smaller for many of the industries most essential to economic growth. While industrial technology rewards controlled scale, information technology rewards decentralized networks. As a result, the smaller, insurgent information-technology firms had different internal systems and cultures that were less overtly hierarchical and more decentralized.

Third, the science and technology that undergirded these innovative sectors worked to the advantage of this new model. It took huge, integrated organizations to do things like build out the national telephony network, but small groups could exploit those achievements to facilitate other technologies, like the consumer modem.

Fourth, the same industry trends also allowed those who were creating improvements to secede from huge firms and form independent companies, using equity and equity-like vehicles to extract greater economic value. This caused a much bigger spread in compensation across companies than is practically possible within most large, traditional organizations. Thus, for those who believed they could create identifiable shareholder value, there were enormous financial incentives to migrate to start-ups, consulting firms, and new types of financial firms like private-equity shops. This created a virtuous cycle: As these firms grew more culturally attractive and remunerative, they increasingly attracted the strongest graduates, which reinforced both their economic and cultural appeal.

Fifth and finally, financing became far more available with the rapid growth of the venture-capital industry, which itself was very much part of this new world. An entire ecosystem of investors, lawyers, accountants, and even landlords (who would take equity instead of cash for initial rent) arose to encourage a venture-backed sector with the basic job of disrupting and displacing big, established companies.

Even to a naïve 23-year-old, it was obvious which way the wind was blowing. I left AT&T after a little more than a year to join a small group that had spun off from the Boston Consulting Group, a strategy-consulting pioneer. It was a new kind of business, focusing on employing computer data-analytics capability to enable improved strategy consulting. The firm grew rapidly, went public, and was eventually sold.

By the late 1990s, I had come to believe that information technology had advanced sufficiently to allow the development and delivery of software to provide certain kinds of very sophisticated business analysis that was better, cheaper, and faster than what was possible through human services. A wave of innovation was sweeping the IT sector and through it the larger economy, and I found myself in a position to try to ride it.

TRIAL-AND-ERROR INNOVATION

In 1999, two friends and I started what has now become a global software company with this intention. We began developing software and quickly found our first test customer. But we soon confronted the practical challenge of actually delivering the software to the customer for use. Installing large-scale software for a major corporation was complicated and expensive. Engineers had to go out to the customer's data center and load software onto computers. Large teams of people connected this software to the rest of the company's information systems, and many other people maintained it. The cost of installation and support was often many times greater than the cost of the software itself.

We used the software-development tools that were current as of 1999. Generally, these were designed to allow access via the internet, but this was entirely incidental to our work since we assumed we would ultimately install our software in the traditional manner. When we delivered a prototype to one early customer, that customer didn't have IT people to install it, so we allowed the company temporary access to our software via the internet — that is, they could simply access it much as they would any website.

As they used it, two things became increasingly clear. First, the software made their company a lot of money. Second, despite this, their IT group had its own priorities, and it would be very difficult to get sufficient attention to install our software anytime soon. Our customer eventually floated the idea of continuing to use our software via the internet while paying us "rent" for it. We realized we could continue this rental arrangement indefinitely, though this would mean less up-front revenue than if we simply sold the software.

We were running low on money and had few options. Our backs to the wall, we theorized that eliminating the installation step could radically reduce costs, particularly if we designed our whole company around this business model rather than how traditional software companies were organized. Our engineering, customer-support, and other costs could be much lower because we wouldn't have to support software that operated in many different environments. Sales and marketing could be done in a radically different, lower-cost way when selling a lower-commitment rental arrangement. We experimented with this approach with our first several customers. Eventually we made it work, and we committed to it. But this decision was highly contingent: It was the product of chance, necessity, and experimentation.

At about the same time, unbeknownst to us, a few dozen other start-up companies were independently discovering the advantages of this model. The key was to design new software from the start with this environment in mind, and to design the business process of the software company — how the sales force was structured, how the product was priced, how customer support was delivered, and so on — for this new environment as well. By about 2004, the delivery of software over the internet — by then renamed "software as a service," or SaaS, by industry analysts — was clearly a feasible business model. It is now one key component of cloud computing.

The SaaS model is today seizing large-scale market share from traditional software delivery. In 2010, industry analysts estimated that SaaS would continue to grow five times faster than traditional software and that 85% of new software firms coming to market would be built around SaaS.

Many things about our software company turned out differently than we had expected. Our settling on the SaaS delivery method is just one example and in fact was not even the most crucial decision we made; it is just a simple one to explain. Ultimately, what allowed us to succeed was not what we knew when we started the company, but what we were able to learn as we went.

The innovations that have driven the greatest economic value have not come from thinking through a chain of logic in a conference room, or simply "listening to our customers," or taking guidance from analysts far removed from the problem. External analysis can be useful for rapidly coming up to speed on an unfamiliar topic, or for understanding a relatively static business environment. But analysts can only observe problems and solutions after the fact, when they can seek out categories, abstractions, and patterns. Our most successful innovations have come almost without exception from iterative collaboration with our customers to find new solutions to difficult problems that have come up during the course of business. At the creative frontier of the economy, and at the moment of innovation, insight is inseparable from action.

More generally, innovation in our time appears to be built upon the kind of trial-and-error learning that is mediated by markets. It involves producers and providers trying different approaches with relatively few limits on their freedom to experiment and consumers choosing freely among them in search of the best value. And it requires that we allow people to do things that might seem stupid to most informed observers — even though we know that most of these would-be innovators will in fact fail. This is an approach premised on epistemic humility. Because we are not sure we are right about very much, we should not unduly restrain experimentation.

To avoid creating a system that is completely undisciplined, however, we must not prop up failed experiments. And to induce people to take such risks, we must not unduly restrict huge rewards for success, even as we recognize that luck plays a role in success and failure.

This new paradigm for innovation almost always boils down to figuring out how to invent and use information technology creatively to re-engineer an ever-expanding range of activities. This isn't surprising, as Moore's Law (which observes that computer-chip performance doubles approximately every 18 months) points to by far the most sustained and significant increase in fundamental technical capacity over the past half-century. The institutional arrangements that have served to enable this wave of innovation consistently exhibit a four-part structure: (1) innovative entrepreneurial companies, (2) financed by independent investment firms, (3) competing and cooperating with established industry leaders, and (4) all supported by long-term government investments in infrastructure and R&D.
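To give a sense of the scale implied by the 18-month doubling cited above, the compounding can be worked out directly. This is a back-of-the-envelope sketch: the function name and the assumption of perfectly steady doubling are illustrative simplifications, not a claim about actual chip performance.

```python
def moores_law_growth_factor(years, doubling_months=18):
    """Cumulative performance multiple after `years` of steady doubling.

    Assumes, purely for illustration, that performance doubles exactly
    once every `doubling_months` months with no slowdown.
    """
    doublings = years * 12 / doubling_months
    return 2 ** doublings


# One doubling period gives a factor of 2; two periods give a factor of 4.
print(moores_law_growth_factor(1.5))
print(moores_law_growth_factor(3))

# Half a century of steady doubling (about 33 doublings).
print(f"{moores_law_growth_factor(50):.2e}")
```

Under this idealized assumption, fifty years of doubling every 18 months compounds to an improvement on the order of ten-billion-fold, which is why the text treats it as the most significant sustained increase in technical capacity of the period.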

This combination characterizes large swaths of the key information-technology and biotechnology industries. Together, these two industries represent about 80% of cumulative U.S. venture-capital commitments over the past 30 years. And their success has not only benefited those directly involved but has also yielded enormous advantages for Americans in general. According to the National Venture Capital Association, as of 2010 about 11% of all U.S. private-sector jobs were with venture-backed companies, including 90% of all software jobs, more than 70% of all semiconductor and biotechnology jobs, and about half of all computer and telecommunications jobs.

This new approach to innovation does not exclusively apply to "high tech" sectors. In fact, perhaps the most important recent example of unexpected innovation following this approach has involved the extraordinarily quick and unexpected transformation of our energy economy — a transformation that has run directly contrary to what had long been the government-led strategic approach to energy innovation.

THE ENERGY REVOLUTION

America's dependence on imported fossil fuels is widely acknowledged to be a source of many serious problems — from the enormous military expenditures required to keep supply lines open in dangerous parts of the world to the dangers of pollution and the threat of climate change.

As recently as five or six years ago, these difficulties seemed intractable, as the rapid development of alternative energy sources seemed the only way out of our dependence on foreign oil, and such development seemed nowhere in sight. In 2008, renewables provided about 7% of all American energy, up less than one percentage point from their contribution a decade earlier. Nuclear-power use was also flat over that decade at about 8%, leaving the lion's share — about 85% — of all American energy to be provided by fossil fuels. That same year, the International Energy Agency reflected widespread conventional wisdom when it projected that U.S. oil and natural-gas production would remain flat or decline somewhat through about 2030, therefore necessitating ever-growing imports. Despite all of the talk and plans, no progress at scale seemed to be feasible.

In the last few years, however, a technological revolution in the extraction of so-called unconventional fossil fuels has transformed this situation with breathtaking speed. The most important technology has been hydraulic fracturing, often called "fracking," but other important developments have included tight-oil extraction, horizontal drilling, and other new applications of information technology. Since 2006, America's output of crude oil, natural-gas liquids, and biofuels has increased by about the same amount as the total output of Iraq or Kuwait, and more than that of Venezuela. America is expected to become a net exporter of natural gas in two years. The current IEA prediction is that America will be the world's largest oil producer within five years and that, by 2030, North America will be a net oil exporter.

For Americans, the benefits of this change are enormous. First are the geopolitical benefits: North American energy independence would not mean that we would no longer have important interests in the Middle East, Africa, and elsewhere, but it would mean that we would no longer be negotiating under duress.

Second are the environmental benefits: These new technologies have yielded a net shift from coal to gas in our economy. Because natural gas produces about half the carbon dioxide that coal does, and almost no soot, this has both long-term global climate benefits and immediate pollution benefits. Since 2006, carbon-dioxide emissions have fallen more in the United States than in any other country, both because of the switch from coal to gas and because of increased energy efficiency driven by both regulation and market forces. This change appears to be structural: The 2013 baseline projection through 2040 by the Energy Information Administration of the U.S. Department of Energy forecasts that American "[e]nergy-related CO2 emissions [will] never get back to their 2005 level." And the more prosaic reduction in soot pollution caused by the partial substitution of gas for coal matters a lot, given that, while climate change gets the headlines, coal causes an estimated 6,000 to 10,000 deaths per year in America today.

Third are the economic benefits: About half of America's merchandise trade deficit in recent years has been petroleum, so the energy revolution will materially reduce our chronic trade deficit. In fact, the United States already halved net oil imports between 2006 and 2012. This change will also create more high-wage, non-college jobs in the energy sector, as well as many other jobs in other sectors made possible by this growth. Additionally, we are now creating so much shale gas that U.S. natural-gas prices are about one-third the level of those in Europe and one-fifth of those in Japan. These lower energy prices materially increase the competitiveness of American industry and thereby create opportunities for more high-wage, non-college jobs through partial re-industrialization. These kinds of jobs should not only help employment on average, but should also reduce income inequality. Citigroup estimated in 2013 that within seven years these changes should combine to add about 3% to GDP, create about 3 million more jobs, and reduce the trade deficit by about 2% of GDP.

This energy revolution is not a panacea. Fracking imposes environmental costs and, as with all innovations, almost certainly carries risks that can only become fully apparent as it scales up. But on balance domestically produced natural gas represents a huge boon to the United States. It is also best seen not as an end point but as one more step on a long and continuing journey to energy sources beyond fossil fuels.

America has led this technological revolution and as of today stands alone among the major world powers in this regard. A number of factors appear to have contributed to our ability to accomplish this. Some are geological: We have substantial shale deposits and lots of widely distributed water, which is needed in large quantities for fracking. Some are legacy benefits, such as an extensive pipeline infrastructure. But other countries have many of these advantages too. The U.S. has succeeded because of the same combination of structural factors that work together to encourage innovation in information technology: a foundation of free markets and strong property rights; the new-economy innovation paradigm of entrepreneurial start-ups with independent financing and competitive-cooperative relationships with industry leaders; and support by government technology investments. Each of these elements has mattered.

The primary driver has been the regulatory framework of strong property rights and free pricing. Among the world's key petroleum-producing countries, only the United States allows private entities to control large-scale oil and gas reserves. And outside of North America, hydrocarbon pricing is typically governed by detailed regulatory frameworks that are built around the realities of conventional petroleum production. In combination, ownership of mineral rights and freer pricing allow economic rewards to flow to innovators in America. This combination has been particularly advantageous for shale because shale formations tend to have great local variability. This means that the cost and complexity of negotiating with many local landowners can be offset in our country by the benefits of highly localized pricing and investment flexibility, which would be difficult to achieve through simpler but inflexible one-size-fits-all deals with national or provincial governments.

A second major American advantage is the network of independent petroleum producers, oil-field service companies, and specialized financing expertise that this regulatory structure has allowed to thrive in America over the past century. Many of the recent technological advances have been made through trial-and-error and incremental improvements, which map well to a Darwinian competition among a network of independent companies, as opposed to huge one-time projects by industry giants or quasi-governmental organizations.

Finally, the Breakthrough Institute has produced excellent evidence that government subsidies for speculative technologies and research over at least 35 years have played a role in the development of the energy boom's key technology enablers: 3-D seismology, diamond drill bits, horizontal drilling, and others. Government-led efforts that are less obviously related — such as detailed geological surveys and the defense-related expenditures described earlier that enabled the U.S.-centered information-technology revolution, which has in turn created the capacity to more rapidly develop "smart drilling" technology — have clearly also been important.

Teasing out how much this government support has contributed is complicated, of course. We can never know the counterfactual of how the industry would have developed in the absence of this support. But the very companies that are widely credited with leading the fracking revolution participated in joint government-industry research projects and made use of tax credits, and their executives have testified that this support was important. Both facts are strong arguments that it mattered.

INNOVATION IN THE NEW ECONOMY

The new American approach to innovation has been applied across sectors as diverse as software, biotech, and energy, but it has yet to reach much of America's economy. Its potential to spur further growth and opportunity remains enormous.

Many of the economy-wide reforms most needed to stimulate further American innovation would be familiar in concept to Abraham Lincoln, because they are merely an updated version of the American System. As in Lincoln's day, they are motivated by an enlightened nationalism that seeks growing incomes and widely shared prosperity and opportunity, with direct investments focused on infrastructure, human capital, and new technologies. To make the most of this new American system in our time, policymakers should pursue four basic goals.

First, they should build infrastructure. While many claims about America's deteriorating basic infrastructure are overblown, further investment in roads, bridges, dams, and railways is warranted. According to the World Economic Forum, American infrastructure quality now ranks 23rd best in the world after having been in the top ten less than a decade ago.

There is an adage among infrastructure engineers: "organization before electronics before concrete." That is, we should always do the low-cost but unglamorous work of optimizing current fixed investments before making new ones. But there is also the need for classic big projects. The key remediable barriers to this type of improvement have to do with legal complexity: federal, state, and local regulations; private lawsuits; union work rules; and other legal hurdles. Congress might help to cut the red tape by creating a class of federal "special national infrastructure projects" that would be exempted from numerous regulatory and related barriers, granted presumptive immunity from specific classes of lawsuits, and given expedited eminent-domain rights. Congress should link funding for such projects to special exemptions from analogous state-level and local regulations in those areas that want to benefit from the projects.

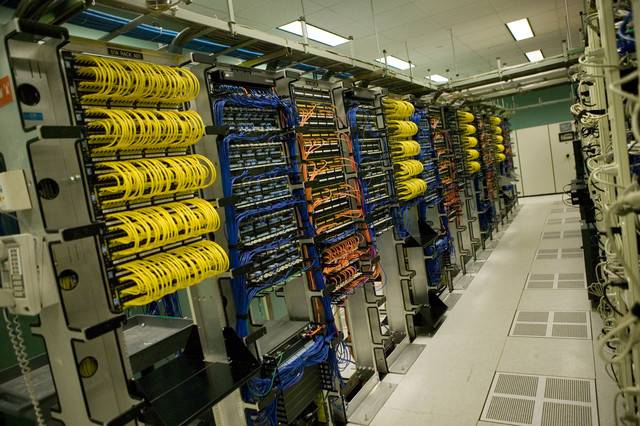

The physical capacity for movement of digital data is the modern version of the telegraph and telephony networks. Development of infrastructure for technologies like these, which are themselves rapidly evolving during the build-out, presents special challenges. We have historically had an approach to this class of infrastructure that combines government investment in visionary projects, financing, right-of-way provision, and standard-setting with a heavy reliance on private-sector competition for the actual build-out. This always seems very messy in the short term, but when technologies are in a state of flux, the combination of public and private tends to create infrastructure better suited to economic success over time.

The crucial unknown today is the relative importance of high-speed fixed-line broadband versus better mobile capacity. Unfortunately, we are leaders in neither technology. America has fewer broadband subscriptions per capita than the major Western European economies and ranks 111th in the world in mobile subscriptions per capita. We should err on the side of overinvestment and seek leadership in both.

Second, policymakers should invest in building visionary technologies. America's technology strategy through most of its history was to commercialize the discoveries of European science. We began investing massively in basic research in the post-World War II era only because there was nobody else left to do it. Today, America is the global leader in basic science. Almost half of all the most cited scientific papers are produced in the U.S. But the world is changing, and in 2013 the U.S. represented only about 20% of world GDP and 28% of world R&D spending. Over roughly the past 20 years, the fraction of American scientific papers with a non-American co-author has grown from 12% to 32%. Science is becoming more international again. We should give ground grudgingly but recognize that over time more science will be done outside the United States. We should participate aggressively in research collaborations such as international space-exploration efforts and the European CERN particle-physics facility, and we should fund exchanges and other vehicles to ensure that we gain maximum benefit in return for our basic-research investments.

And we should think differently in this new world about what basic science we conduct here. We should bias basic-research funds not toward those areas that inherently hold the greatest promise, but toward those in which the long-run economic benefits are likely to remain in the United States, because they require the build-up of hard-to-transfer expertise or infrastructure that is likely to generate commercial spin-offs. University and research laboratory rules and the patent system should recognize the long-run desirability of researchers creating private wealth in part through the exploitation of knowledge created by these publicly supported institutions.

More fundamentally, the sweet spot for most government research funding will likely be visionary technology projects, rather than true basic research on one extreme, or commercialization and scale-up on the other. We have a long track record of doing this well and an existing civilian infrastructure that can be repurposed, including most prominently the Department of Energy's national laboratories, the National Institutes of Health, and NASA. Each of these entities is to some extent adrift the way Bell Labs was in the 1980s and should be given bold, audacious goals. They should be focused on solving technical problems that offer enormous social benefit, but are too long-term, too speculative, or have benefits too diffuse to be funded by private companies.

What would this mean in practice for these leading national assets? A few examples may help point to an answer. We should, for instance, return the DOE's labs to a more independent contractor-led model with clearer goals but greater operational flexibility. We might set for one lab the goal of driving the true unit-cost of energy produced by a solar cell below that of coal, and a second lab the same task for nuclear power. We could combine the 27 independent institutes and centers of the NIH into a small number of major programs and task each with achieving measurable progress against a disease. We could task NASA with leading an international manned mission to Mars.

Careful deliberation could lead to different specific targets, but in my experience great technical organizations have a characteristic spirit that starts with goals that are singular, finite, and inspiring. That is, each organization should have one goal. The goal should be sufficiently concrete that we can all know if it has been achieved or not. And it should be sufficiently impressive that people are proud to work toward it, without being so obviously outlandish that it just inspires cynicism. Beyond goals, political leadership has the responsibility for selecting extremely able senior leadership, providing adequate resources, granting operational autonomy, and measuring progress.

Third, we should build human capital. As American statesmen have known for centuries, this is an essential building block of innovation and prosperity. And we are losing our edge.

Human capital is built by a combination of attracting and admitting immigrants who have it, and then helping everyone in the country to further develop their skills and abilities. Our immigration system, for instance, should be reconceived as a program of recruitment, rather than law enforcement or charity. We should select among applicants for immigration above all through assessments of skills and capabilities. Canada and Australia do this now, with excellent results. And exactly as Hamilton argued two hundred years ago, immigration and investments in visionary technology projects will tend to be mutually reinforcing. The projects will tend to attract and retain immigrants who have much to offer the country, and key immigrants will help drive these visionary projects forward.

Better schools and universities are also essential, of course, and they would again reinforce gains made through high-skill immigration, investments in technical projects, and investments in infrastructure. The challenges confronting meaningful education reform, however, seem almost intractable. Addressing them will require a serious commitment to encouraging competition and innovation within education itself.

Fourth and finally, therefore, we must significantly deregulate and encourage competition in the three sectors that have been most resistant to the new American system of innovation: government services, education, and medicine. Today, we treat these three sectors as hopeless victims of Baumol's Cost Disease: the idea that, because they involve services delivered by human beings, they will inevitably grow in relative cost versus those sectors that deliver value through technology. But there is nothing inevitable about this, and all three are too important to be disregarded as lost causes. We cannot simply exclude from productivity gains the 30% of the workforce that works in government services, education, and medicine, especially given how essential these sectors are to enabling innovation and productivity growth in other parts of the economy.

Driving productivity improvements in these areas, in theory, would simply require allowing the same IT-driven gale of disruptive innovation that has transformed other sectors. The practical reality, though, is that it is very difficult to create in these industries the sorts of incentives that have driven innovation in areas like software or energy. Resources are mostly politically controlled, and various participants use this control to protect themselves from disruptive change. This is not entirely cynical. Our moral intuitions about these sectors are very different, for one thing. It is not coincidental that, in the West, schools and medicine were provided for centuries through church-linked institutions by "professionals," a religious term in its origin, which implied that teachers, nurses, and doctors were expected to place service to others ahead of self-interest. And the provision of public services, too, is taken to be different in kind from that of other services in our economy.

But reform in these areas that recognizes these realities is both essential and possible. We should focus on a number of reforms: unbundling our various integrated welfare programs to allow more targeted piecemeal improvements in government services; permitting greater consumer choice in both education and health care; providing useful, standardized outcome measurements to enable more informed consumer decision-making in all these areas; encouraging new market entrants by loosening regulatory constraints and permitting profits; and, finally, funding demonstration projects for innovative application of technologies and methods that produce measurable gains in stated outcomes-per-unit cost. Realistically, however, the best we can hope for is to make the government, education, and health sectors more market-like. They will never be as efficient as other parts of the economy.

Simply making government, education, and medicine function more like markets does not seem like all that tall an order, but at the moment we are surely moving in the other direction — perhaps in medicine most of all. It is important to change course. Transforming government, education, and medicine is essential not only because these sectors play such an important part in our economy but also because they are vital to innovation in every other arena. Today they too often stand in the way of such innovation.

They do so not only by resisting innovation but also by denying it much-needed resources. Ultimately, the money for visionary infrastructure and technology investments will have to come from somewhere. Spending on the core welfare-state programs of health care, education, pensions, and unemployment insurance dwarfs spending on innovation programs. Even small improvements resulting from a modernized welfare system and a more competitive health-care system could free up the resources necessary to invest in our future without increasing taxes or exacerbating our budget problems. Our main limitation in pursuing an aggressive innovation program is a deficit of vision and confidence, not a lack of money.

BUILDING ON OUR STRENGTHS

The new American system of innovation, then, does not apply everywhere and also probably serves to exacerbate some of the social tensions created by inequality. But it has one enormous advantage: It works. It evolved out of necessity when the old system just couldn't move fast enough.

America now lives at the economic frontier, but is no longer an economic hegemon. We cannot improve living standards by copying others' innovations in the way that India or China can. And we are no longer so economically dominant that we can semi-monopolize the world's leading science, technology, management methods, and general economic might. If we want to avoid stagnation, we must keep pioneering new methods of innovation that play to our strengths.

America has again invented a new variation on our unparalleled system for expanding prosperity and opportunity. It holds the promise of again propelling enormous improvements in living standards and charting a path for unmatched success. But if the American miracle is to power our growth for another generation, we need to understand it, and unleash it.